Readability and reliability of Rhinology patient information on Google

Introduction

Access to the internet has increased rapidly in recent decades and now reaches almost 60% of the global population (1). In Western societies this rate rises to 90%, and internet access is expected in the general population (1,2). The internet allows near-instant access to large quantities of largely unregulated information. Google is comfortably the most popular search engine, with a 92.6% market share (3,4). Studies have indicated that up to 70% of patients access the internet to read health-related information (5). Opinions differ among clinicians: one study of internet use amongst General Practice patients concluded that it leads to a better mutual understanding of symptoms and diagnosis (6), yet there is also a tendency amongst health care professionals to discourage patients from using internet searches to access clinical information (7).

Studies across several specialties have explored the reliability of online resources accessed by patients for their medical conditions and associated treatments (8-12). However, the literature available regarding the quality of online patient health information in Otolaryngology is scarce (13). Sinus conditions affect a large section of the population and are commonly encountered in all levels of health care (14). The body of material available on the internet related to sinusitis and endoscopic sinus surgery has not been well studied.

The usefulness of a health resource to patients depends on both its readability and its reliability. Readability is defined as how easy a text is to understand, and reliability is how trustworthy and consistent the information presented is. A number of objective tools and scoring criteria have been developed to measure these. A lack of either factor could compromise the patient’s understanding and ultimately impact on their health care. We hypothesise that online resources in Rhinology that are available to patients are not appropriately readable and reliable. The aim of the study therefore is to objectively assess the readability and reliability of information relating to sinusitis and endoscopic sinus surgery currently available on Google to the general public.

We present the following article in accordance with the TREND reporting checklist (available at http://dx.doi.org/10.21037/ajo-21-2).

Methods

Ethics committee approval was not required for this study.

Internet search (Table S1)

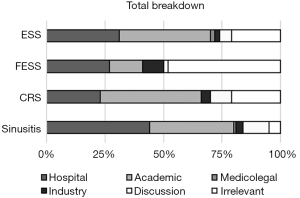

The four search terms: “Sinusitis”, “Chronic rhinosinusitis”, “Endoscopic sinus surgery” and “FESS” were used in the Google search engine. The search was performed through a Google Chrome browser on 03 September 2019 in Hamilton, New Zealand. A Waikato Hospital computer was utilized without logging into a Google account. The first 100 consecutive websites for each search term were recorded, generating a total of 400 websites.

Each website was allocated to one of five general categories. “Hospital” includes websites generated by public or private hospitals, the Ministry of Health, medical associations or individual physicians. “Academic” primarily includes journal articles and book chapters. “Medicolegal” encompasses insurance companies for both patients and surgeons. “Industry” includes pharmaceutical and medical device companies. Finally, “Discussion articles” refers to news and magazine articles, blogs and social media posts.

The individual websites were then reviewed and scored for readability and reliability by a single author.

Readability

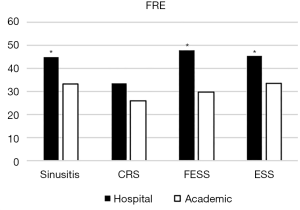

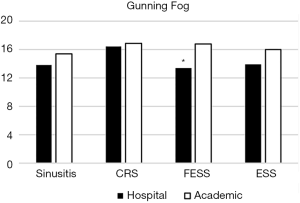

The readability of a website was measured through three objective scoring criteria: the Flesch Reading Ease score (FRE), the Flesch-Kincaid Grade level (FKG) and the Gunning Fog score (GF). The FRE was created by Rudolf Flesch, who joined with J. Peter Kincaid in 1975 to create the FKG for the US Navy. The three scores indicate the level of English education required for comprehension of the text. The FRE ranges from 0 to 100, where 0–30 equates to a college graduate level and 90–100 is equivalent to 5th grade. The FKG corresponds directly to the US grade level, and a score over 12 indicates a college-level requirement. The GF score predicts the number of years of education needed for comprehension. A score over 17 indicates college-level education (Table 1).
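As an illustration of how these three scores are derived, the sketch below applies the standard published formulas for FRE, FKG and GF to a passage of text. It is not the tool used in this study: the function names are hypothetical, and the syllable counter is a rough vowel-group heuristic (an assumption), so absolute values will differ somewhat from dedicated readability software.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic (assumption): one syllable per group of consecutive vowels."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Treat a trailing 'e' as silent (e.g. "cause"), but not a trailing "le" (e.g. "table").
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def readability_scores(text: str) -> dict:
    """Return FRE, FKG and GF for a non-empty English text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    syllables = [count_syllables(w) for w in words]
    # Gunning Fog counts "complex" words, taken here as three or more syllables.
    complex_words = sum(1 for n in syllables if n >= 3)

    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = sum(syllables) / len(words)

    return {
        "FRE": 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word,
        "FKG": 0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59,
        "GF": 0.4 * (words_per_sentence + 100 * complex_words / len(words)),
    }


if __name__ == "__main__":
    simple = "The cat sat on the mat. The dog ran to me."
    technical = ("Chronic rhinosinusitis necessitates comprehensive "
                 "otolaryngological evaluation.")
    print(readability_scores(simple))
    print(readability_scores(technical))
```

Short sentences with short words raise the FRE and lower the FKG and GF, which is why the technical sentence scores as far harder to read than the simple one.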

Reliability

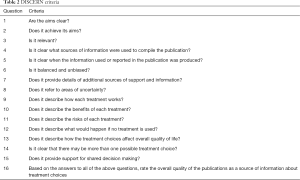

The credibility and reliability of the websites were scored using the DISCERN score, the JAMA score and the HONcode. The DISCERN score was created by a team of experts from different areas in health. It consists of 16 questions, each with a possible score between 1 and 5, generating a total score between 16 and 80. The first eight questions focus on the reliability of the text and the second eight questions focus on the treatment (15) (Table 2).
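The DISCERN arithmetic described above can be sketched as follows. This is a minimal illustration only: the function name and the returned keys are hypothetical, and the split into two groups of eight questions simply follows the description in the text.

```python
def discern_scores(item_scores):
    """Sum 16 DISCERN item ratings (each 1-5) into sub-scores and a total."""
    if len(item_scores) != 16:
        raise ValueError("DISCERN expects exactly 16 item ratings")
    if any(not 1 <= s <= 5 for s in item_scores):
        raise ValueError("each DISCERN item is rated from 1 to 5")

    reliability = sum(item_scores[:8])  # first eight questions, as grouped in the text
    treatment = sum(item_scores[8:])    # second eight questions, as grouped in the text
    return {
        "reliability": reliability,
        "treatment": treatment,
        "total": reliability + treatment,  # always between 16 and 80
    }


if __name__ == "__main__":
    # Hypothetical ratings for a single website.
    print(discern_scores([3] * 8 + [4] * 8))
```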

The JAMA benchmark criteria were published in 1997 and assess website quality through authorship, attribution, disclosure and currency (16). They provide a simple mechanism to quickly review the basic standards expected of a website. Each criterion is scored zero or one, giving a total score between 0 and 4.
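The JAMA score is a count of how many of the four criteria a website meets. A minimal sketch, with a hypothetical class name and field names taken from the four criteria listed above:

```python
from dataclasses import dataclass, astuple


@dataclass
class JamaBenchmarks:
    """One boolean per JAMA benchmark criterion for a single website."""
    authorship: bool
    attribution: bool
    disclosure: bool
    currency: bool

    def score(self) -> int:
        # Each criterion met contributes one point, so the total is 0 to 4.
        return sum(1 for met in astuple(self) if met)


if __name__ == "__main__":
    # Hypothetical website: named author and dated content, but no references
    # and no disclosure of ownership or funding.
    site = JamaBenchmarks(authorship=True, attribution=False,
                          disclosure=False, currency=True)
    print(site.score())
```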

The Health On the Net (HON) Foundation is a not-for-profit organization established in 1995 with the aim of filtering the World Wide Web for trustworthy health information online (17). Presence of its HONcode on a website would suggest more reliable health content.

Analysis

Websites were considered irrelevant if the information was inconsistent with the search term or access was restricted. Journal articles were excluded from analysis if the full article was not accessible. The relationship between the readability and reliability criteria was also reviewed. Statistical analysis was carried out using Minitab 18 software. A 5% level of statistical significance (P<0.05) was used for all tests. One-way analysis of variance (ANOVA) was used to test the equality of the mean scores for the different categories. If the assumptions of the ANOVA test did not hold, the Kruskal-Wallis test was used to test the hypothesis that the scores were identical. A two-sample t-test was used to test the equality of the means for the first 50 websites vs. the rest. The relationships between scores were investigated using two-way plots and regression.
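The analysis in this study was performed in Minitab; the sketch below is only an illustration of the test statistics behind two of the comparisons named above, written with the Python standard library. The function names and the example scores are hypothetical. The t statistic shown is the unequal-variance (Welch) form, which is an assumption, since the variant used is not stated. Converting either statistic to a P value requires the F or t distribution from a statistics package.

```python
import math
from statistics import mean, variance


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA across groups."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


def welch_t(a, b):
    """Return the two-sample t statistic without assuming equal variances."""
    standard_error = math.sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(a) - mean(b)) / standard_error


if __name__ == "__main__":
    # Hypothetical scores for three website categories.
    categories = [[1, 2, 3], [2, 3, 4], [5, 6, 7]]
    print(one_way_anova_f(categories))
    # Hypothetical scores for the first 50 vs. the remaining websites.
    print(welch_t([1, 2, 3], [2, 3, 4]))
```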

Results

Sinusitis and chronic rhinosinusitis generated over 12 million results each while FESS and endoscopic sinus surgery generated 8.3 and 5.6 million results respectively.

Sinusitis

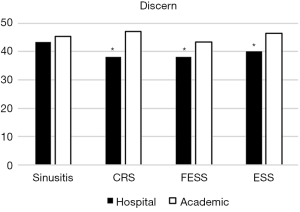

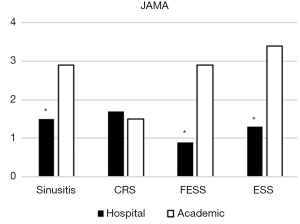

The hospital and academic categories contained the most websites, with 44 and 36 respectively. The mean FRE score was significantly higher for hospital-generated resources than for academic resources (44.9±4.84 vs. 33.2±5.36, P<0.01). There was no difference detected in FKG and GF readability scores between the two categories (11.6±0.98 vs. 13.2±1.08, P=0.24 and 13.8±1.00 vs. 15.4±1.11, P=0.35). Academic resources were found to be more reliable, with a significantly greater JAMA score (2.9±0.35 vs. 1.5±0.32, P<0.01) and greater prevalence of HONcode (30.6% vs. 13.6%). No difference in DISCERN score was found between hospital and academic resources (P=0.13). No HONcodes were found in websites of the other categories. Eleven websites were discussion articles and five websites in total were considered irrelevant (Figures 1-5).

Chronic rhinosinusitis

Twenty-three websites were hospital generated, 43 were academic and 21 were irrelevant. Few websites were categorized as industry or discussion articles (four and nine respectively). The hospital resources had a greater FRE score than the academic websites, although the difference was not statistically significant (33.4±6.2 vs. 25.8±4.6). No significant difference in readability was found for FKG and GF (FKG 14.2 vs. 14.7; GF 16.4 vs. 17.0). The academic resources were more reliable, with a significantly higher DISCERN score (47.1±3.3 vs. 38.2±4.5, P=0.01) and greater HONcode prevalence (14% vs. 8.7%). The first 50 websites had significantly higher DISCERN and JAMA scores than the second 50 for chronic rhinosinusitis (DISCERN 48.2 vs. 39.1, P<0.001; JAMA 3.2 vs. 2.7, P=0.04).

FESS

Forty-eight of the 100 websites were irrelevant to sinus surgery, while 27 and 14 fell into the hospital and academic categories respectively. Hospital resources were significantly easier to read than academic resources on all three readability scores (FRE 47.8±4.5 vs. 29.7±6.3, FKG 10.9±1.0 vs. 14.2±1.2, GF 13.4±1.1 vs. 16.8±1.4, P<0.01). While easier to read, the hospital resources were significantly less reliable than academic websites on both the DISCERN score (38.1±4.3 vs. 43.3±5.8, P=0.05) and the JAMA score (0.86±0.33 vs. 2.90±0.40, P<0.01). HONcode certification was present on 7.1% of hospital websites. The JAMA score was significantly greater in the first 50 websites, at 2.3 compared to 1.7 for the second 50 (P=0.02).

Endoscopic sinus surgery

There were 31 and 39 websites in the hospital and academic categories respectively, while 21 were found to be irrelevant. Hospital resources were significantly easier to read on the FRE score (45.5±5.7 vs. 33.4±5.1, P=0.04), but the differences in FKG (P=0.11) and GF (P=0.09) were not significant. The academic resources were again significantly more reliable on DISCERN (46.4±3.3 vs. 40.2±3.5, P=0.03), JAMA criteria (3.4±0.3 vs. 1.3±0.3, P<0.01) and HONcode (12.5% vs. 3.1%).

An analysis of the relationship between the reliability scores (DISCERN and JAMA) and the readability scores (FRE and FKG) did not show any significant correlation, with the maximum R² being 12.6%. The search terms sinusitis and chronic rhinosinusitis had 14 overlapping websites, while FESS and endoscopic sinus surgery had 12 overlapping websites. The subcategories of medicolegal, industry and discussion articles occurred infrequently and were not included in the analysis.
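For readers unfamiliar with the R² value quoted above, the following sketch shows how the coefficient of determination is obtained for a simple linear regression of one score on another. The function name and the paired scores are hypothetical and are not the study data.

```python
def r_squared(x, y):
    """Coefficient of determination for a simple linear regression of y on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    # With a single predictor, R² equals the squared Pearson correlation.
    return sxy ** 2 / (sxx * syy)


if __name__ == "__main__":
    # Hypothetical paired scores (e.g. a readability score and a reliability score).
    readability = [1, 2, 3, 4]
    reliability = [1, 3, 2, 4]
    print(f"R² = {r_squared(readability, reliability):.1%}")
```

An R² of 12.6% means that, at most, about one-eighth of the variation in one score is accounted for by a linear relationship with the other.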

Discussion

Vast amounts of health information are available and easily accessible on the internet. Patients are increasingly using this information as an adjunct towards their health care. Rather than a barrier to clinicians, health information “Googled” by patients should ideally be utilized as a resource to complement management. However, in recent years, many specialties including orthopedics, gynaecology and psychiatry have consistently found the available online resources to be presented at a difficult reading level and lacking in quality (8-12).

Doubleday et al. found resources on thyroid treatment to be fair in quality and beyond the reading level of average patients (18). Yi and Hu found similar results for vocal fold injections (19). Interestingly, Shetty et al. analyzed six specific online medical websites for otitis media and found both the readability and reliability to be adequate (20). Cherla et al. found patient material on endoscopic sinus surgery to be written above the recommended education level (21). With the exception of Cherla’s study, however, very little is currently known about the quality of Rhinology information online.

While literacy among the 37 developed, high-income countries of the Organisation for Economic Co-operation and Development (OECD) approaches 100%, health literacy levels are far lower (22). The National Assessment of Adult Literacy (NAAL) was a large-scale survey conducted within the US in 2003. One-third of the population were found to have basic or below basic health literacy skills. Those who are aged above 65, Black or Hispanic are at particular risk (23). A Ministry of Health survey found New Zealanders to have poor health literacy overall (24).

Across all search terms and categories, our data show college attendance to be the minimum level of education required for comprehension. Information from academic sources for chronic rhinosinusitis and FESS is presented at a college graduate level. The latest US Census data show that 61% of the population aged over 25 have some college education and only 35% have completed college with a bachelor’s degree (25). This represents a significant disconnect between online health information and its target population. Based on the Census data, a high school level of education, attained by 90% of the adult population, should be targeted for online resources. In our study, no individual category had a readability of this level.

The reliability of a website depends on a number of factors, including clear authorship, publication date, references, relevance and accuracy. While no single group of criteria encompasses all of these factors, the combined use of DISCERN, JAMA and HONcode status provides a useful objective tool for comparison. The challenge in online health information is finding the balance between providing information that patients can understand and providing enough information.

Geographic variation in search results is noted as a limitation of this study. With the search completed in New Zealand, a number of the websites encountered were generated locally. These websites are unlikely to be encountered through similar search terms globally. However, only 22 of the 400 websites listed were New Zealand-based, and so we consider our results to be broadly representative of search findings worldwide. We found that while the targeted demographic varied depending on the website origin, the mean readability and reliability were not significantly different. We also note the possibility of individual search variation due to the personalised Google search function and the development of smart technology. This is however unlikely to impact on the overall quality of the websites. A second limitation is that data collection and scoring were completed by a single author. This may contribute to a scoring bias; however, the consistency in scoring interpretation was viewed as an advantage, as it removes potential interpersonal differences.

Summary

Our study identifies a lack of online health information in Rhinology with appropriate reading ease and reliability. Patients need a college-level education to comprehend resources that are only of poor to fair quality. These data challenge Rhinologists, in the short term, to consider assisting patients with their online searching and, in the longer term, to create high-quality online patient resources with content that is readable and reliable.

There is a further notable challenge. Even if a website fulfills the criteria for readability and reliability, it may not be found amongst the large volume of websites returned by the search terms. As such the provision of high-quality resources may be best in centralised online spaces which are easily found by patients.

Conclusions

Online resources are rapidly developing into a key component of a patient’s medical journey. Patients searching for online health information in Rhinology are hindered by the vast number of websites which are irrelevant, difficult to comprehend, inaccurate or unreliable. Clinicians should aim to take additional responsibility by being familiar with relevant and reliable online Rhinology resources so that suitable suggestions can be provided to all patients. Clinicians generating future online resources should take note of this to avoid further complicating the patient’s Google search in an already congested World Wide Web.

Acknowledgments

Funding: None.

Footnote

Reporting Checklist: The authors have completed the TREND reporting checklist. Available at http://dx.doi.org/10.21037/ajo-21-2

Data Sharing Statement: Available at http://dx.doi.org/10.21037/ajo-21-2

Conflicts of Interest: All authors have completed the ICMJE uniform disclosure form (available at http://dx.doi.org/10.21037/ajo-21-2). AJW reports research grants from: Waikato Medical Research Foundation, Waikato Clinical Campus Summer Studentship fund and Linsell Richards Education Fund. AJW and JW are developing an open access internet resource for ENT patients (www.ENTinfo.nz) with the assistance of a charitable donation from which no pecuniary benefit will be obtained. The other authors have no conflicts of interest to declare.

Ethical Statement: The authors are accountable for all aspects of the work (if applied, including full data access, integrity of the data and the accuracy of the data analysis) in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved. Ethics committee approval was not required for this study.

Open Access Statement: This is an Open Access article distributed in accordance with the Creative Commons Attribution-NonCommercial-NoDerivs 4.0 International License (CC BY-NC-ND 4.0), which permits the non-commercial replication and distribution of the article with the strict proviso that no changes or edits are made and the original work is properly cited (including links to both the formal publication through the relevant DOI and the license). See: https://creativecommons.org/licenses/by-nc-nd/4.0/.

References

1. Internet World Stats - Usage and Population Statistics.

2. European Commission. Flash Eurobarometer 404 (European Citizens’ Digital Health Literacy). In: Cologne GDA, editor. 2015.

3. State of the Internet. The State of the Internet in New Zealand. Available online: https://internetnz.nz/sites/default/files/SOTI%20FINAL.pdf

4. Search Engine Market Share Worldwide.

5. Biggs TC, Jayakody N, Best K, et al. Quality of online otolaryngology health information. J Laryngol Otol 2018;132:560-3.

6. Van Riel N, Auwerx K, Debbaut P, et al. The effect of Dr Google on doctor-patient encounters in primary care: a quantitative, observational, cross-sectional study. BJGP Open 2017;1:bjgpopen17X100833.

7. Woodward-Kron R, Connor M, Schulz PJ, et al. Educating the patient for health care communication in the age of the world wide web: a qualitative study. Acad Med 2014;89:318-25.

8. O'Neill SC, Nagle M, Baker JF, et al. An assessment of the readability and quality of elective orthopaedic information on the Internet. Acta Orthop Belg 2014;80:153-60.

9. O'Neill SC, Baker JF, Fitzgerald C, et al. Cauda equina syndrome: assessing the readability and quality of patient information on the Internet. Spine (Phila Pa 1976) 2014;39:E645-9.

10. Devitt BM, Hartwig T, Klemm H, et al. Comparison of the Source and Quality of Information on the Internet Between Anterolateral Ligament Reconstruction and Anterior Cruciate Ligament Reconstruction: An Australian Experience. Orthop J Sports Med 2017;5:2325967117741887.

11. Perruzza D, Jolliffe C, Butti A, et al. Quality and Reliability of Publicly Accessible Information on Laser Treatments for Urinary Incontinence: What Is Available to Our Patients? J Minim Invasive Gynecol 2020;27:1524-30.

12. Arts H, Lemetyinen H, Edge D. Readability and quality of online eating disorder information-Are they sufficient? A systematic review evaluating websites on anorexia nervosa using DISCERN and Flesch Readability. Int J Eat Disord 2020;53:128-32.

13. Maung JKH, Roshan A, Sood S. P183: FESS on the Internet. Otolaryngol Head Neck Surg 2006;135:272-3.

14. Beule A. Epidemiology of chronic rhinosinusitis, selected risk factors, comorbidities, and economic burden. GMS Curr Top Otorhinolaryngol Head Neck Surg 2015;14:Doc11.

15. Charnock D, Shepperd S, Needham G, et al. DISCERN: an instrument for judging the quality of written consumer health information on treatment choices. J Epidemiol Community Health 1999;53:105-11.

16. Silberg WM, Lundberg GD, Musacchio RA. Assessing, controlling, and assuring the quality of medical information on the Internet: Caveant lector et viewor--Let the reader and viewer beware. JAMA 1997;277:1244-5.

17. Health on Net Foundation: HON Code. Available online: https://www.hon.ch/HONcode/Patients/Visitor/visitor.html

18. Doubleday AR, Novin S, Long KL, et al. Online Information for Treatment for Low-Risk Thyroid Cancer: Assessment of Timeliness, Content, Quality, and Readability. J Cancer Educ 2020; [Epub ahead of print].

19. Yi GS, Hu A. Quality and Readability of Online Information on In-Office Vocal Fold Injections. Ann Otol Rhinol Laryngol 2020;129:294-300.

20. Shetty KR, Wang RY, Shetty A, et al. Quality of Patient Education Sections on Otitis Media Across Different Website Platforms. Ann Otol Rhinol Laryngol 2020;129:591-8.

21. Cherla DV, Sanghvi S, Choudhry OJ, et al. Readability assessment of Internet-based patient education materials related to endoscopic sinus surgery. Laryngoscope 2012;122:1649-54.

22. The World Bank Data. Literacy rate, adult total. Available online: https://data.worldbank.org/

23. Cutilli CC, Bennett IM. Understanding the health literacy of America: results of the National Assessment of Adult Literacy. Orthop Nurs 2009;28:27-32; quiz 33-4.

24. Korero Marama: Health Literacy and Maori. Ministry of Health. 2010.

25. The United States Census. Educational Attainment in the United States. Available online: http://www.census.gov/

Cite this article as: Wu J, Hunt L, Wood AJ. Readability and reliability of Rhinology patient information on Google. Aust J Otolaryngol 2021;4:16.